Chill Out!

Just did a simple tempco test on two coils. The first was coil #3 from the Q testing:

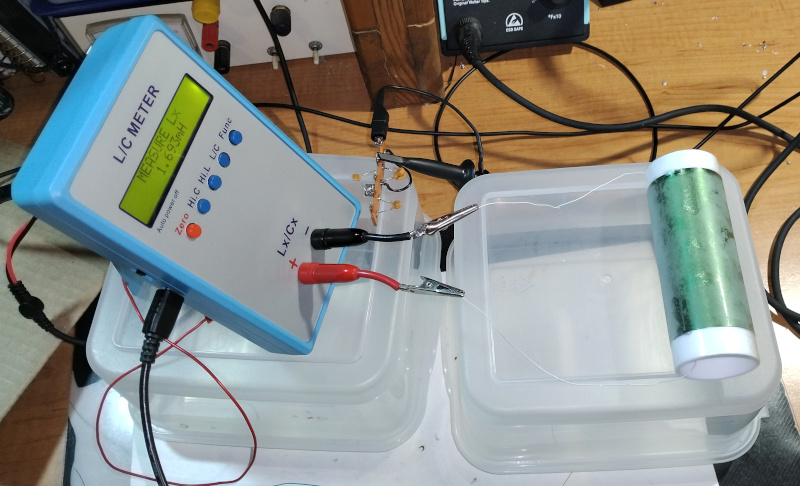

Stuck it in a -20 C freezer for an hour, then connected it to my LC meter. As you can see on the meter in the photo, the frosty coil measured 1.693 mH, and this didn't change by even one count over the course of warming up to 17 C room temperature. Which means the coil is stable to better than:

0.001 / 1.693 / (17 - (-20)) = 16 ppm / C

I'd have to connect it to the D-Lev to measure with greater resolution and get an actual number here. For reference, a typical crystal oscillator is good for around 0.25 ppm / C.

The second coil tempco tested was the Bourns 6310-RC RF choke which employs a ferrite form (testing shown here: http://www.thereminworld.com/forums/T/28554?post=224024#224024). It measured 50.32 mH @ -20 C and 51.36 mH @ 17 C:

(51.36 - 50.32) / 51.36 / (17 - (-20)) = 547 ppm / C

So the ferrite Bourns tempco is at the very least 547 / 16 = 34 times worse than the air core solenoid! So on the basis of this simple experiment alone, I will not be using ferrite coils in any of my designs.